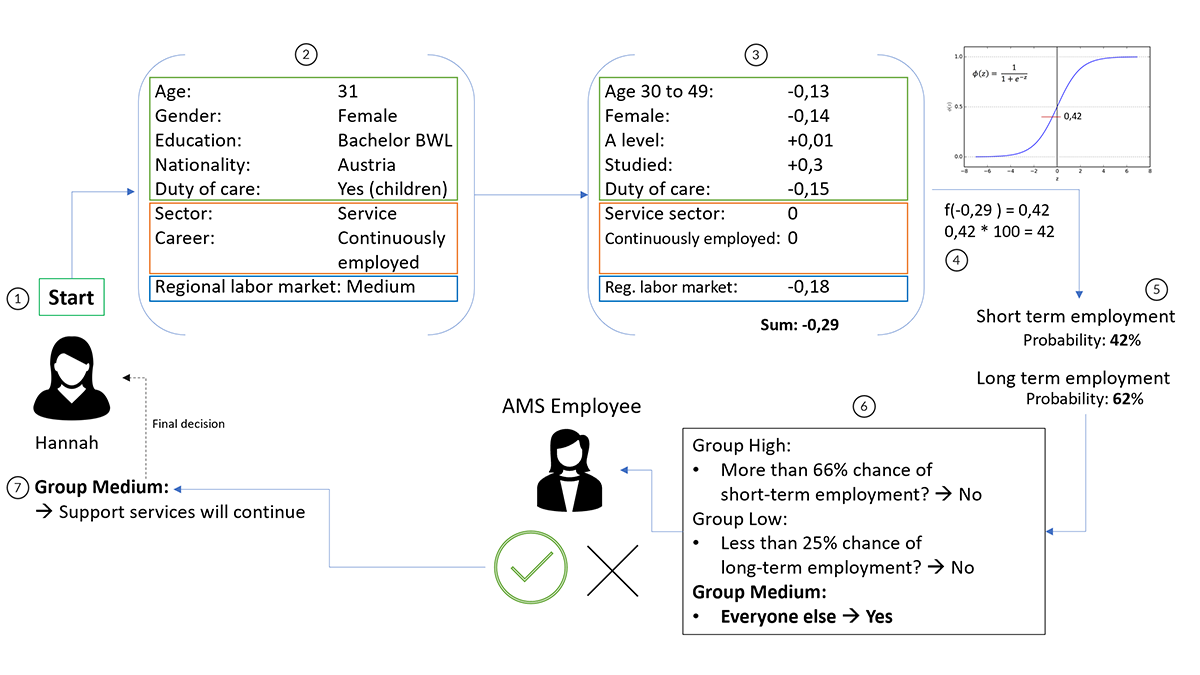

Have you ever been scored or classified by an algorithm? Chances are that you have without necessarily knowing about it, just like many other individuals who, for example, were scored for loan approval or social services eligibility. And even if you knew that an algorithm was used to make a decision about you, how could you understand how this decision was made? And how could you tell whether the decision was fair?

In our paper, we address these questions by taking a closer look at how explanations can support understanding of algorithmic systems, using a framework from the learning sciences called the “six facets of understanding”. In a second step, we investigate how this understanding enables people to take agency, which in our case means whether people can assess an algorithm in terms of ethical values (for example: Is it fair or not?). We find that the background of individuals strongly affects how they understand a given explanation. Take, for example, the following two quotes and compare which aspects of understanding each one touches on:

- I see him almost like me. He’s 49, so for me, he would already count as 50+ and should get extra support. [...] I find the algorithm good and the human decision bad, because the human one goes by numbers and is not accommodating. The algorithm just sees 49 and makes the decision. (P17)

- I understand why the algorithm says Group "Low" in this case. If the job-seeker retrains and can explain his career well I think he could find work, but needs support to do so [...] Personally, I would perhaps not rate him that way, but I think it’s good that he gets into the group. (P6)

The first participant mentions their own personal circumstances and speaks about the difference between human and algorithmic decision-making, while the second takes a more analytical perspective on the decision and the job-seeker’s situation. These are two very different approaches, and both are valid ways of assessing the algorithm.

We recommend that future explanation approaches consider these individual approaches to understanding as variables in their design. We further highlight the value of the dialogue explanation modality, which invites participants to share more of their understanding and experiences. For our other contributions, we invite you to take a look at the full paper, which is available here:

On the Impact of Explanations on Understanding of Algorithmic Decision-Making

Timothée Schmude, Laura Koesten, Torsten Möller, Sebastian Tschiatschek

In 2023 ACM Conference on Fairness, Accountability, and Transparency (FAccT ’23), June 12–15, 2023, Chicago, IL, USA. ACM, New York, NY, USA, 12 pages.

https://vda.cs.univie.ac.at/research/publications/publication/7661/